When OpenAI first approached Microsoft for investment, following Musk’s exit in 2018, the team showed Bill Gates a robotic hand that had learned to solve a Rubik’s Cube through its own trial and error.

Gates shrugged.

Nor was he impressed by the company’s documentary, ‘Artificial Gamer’, which showed an OpenAI agent defeating the World Champions of DOTA 2, a strategy-based video game¹.

Gates saw AI’s potential as a tool to support PhD-level research – not as a way to play with childhood toys. The team finally won him over with a demonstration of GPT-2, which could just about summarise documents and answer questions.

Microsoft invested $1 billion in OpenAI on the strength of what it saw in the GPT model – and so began the relentless march to harness the potential of AI in advanced research².

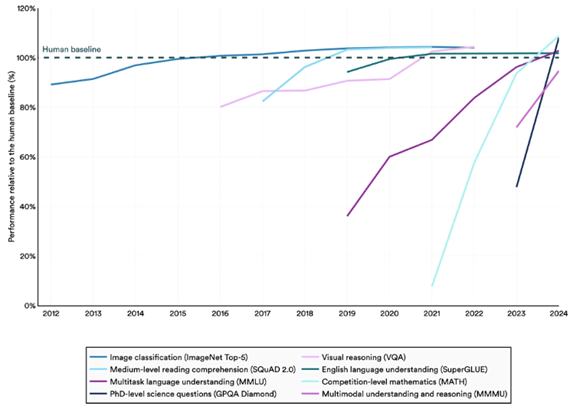

Six years later, GPT-5 is light years ahead of the model presented to Gates. And in 2024, AI models crossed a seminal milestone – matching human ability in solving PhD-level science questions.

This could have massive implications across many areas of the economy. But one area that is especially ripe for AI-driven gains in advanced research is health care.

Figure 1: Select AI Index technical performance benchmarks vs. human performance³

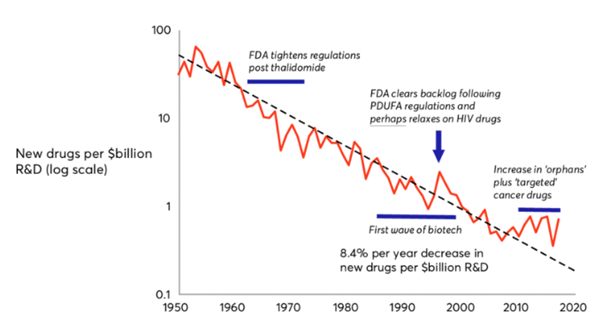

R&D productivity in pharma has been in consistent decline

R&D costs for pharmaceutical pipelines have been steadily rising over the last 10 years, and productivity has been falling.⁴

This is famously captured by ‘Eroom’s Law’ (Moore’s Law spelled backwards), the observation that the number of new molecules approved by the FDA per $bn in R&D spending has been in steady decline since the 1950s.

Figure 2: Eroom’s Law: the number of new molecules approved by the FDA per $bn global R&D spending⁵

There is evidence that pharma companies are already beginning to adopt AI to solve this problem. The use cases we hear companies talk about most relate to improving clinical trials – AI can enable better patient selection, trial design, and predictive modelling to reduce failures.

Meanwhile, a meta-study from this year finds evidence of big pharma using AI to speed up lead molecule and target identification, and using biodata to improve drugs’ safety profiles⁶.

However, due to the long lead times on new drug development (typically 10-15 years), and strict regulation, it may take time for us to see real evidence of this improving pharma innovation and R&D productivity.

MedTech is at the forefront of AI adoption in health care

Medical devices, or MedTech, are not burdened by the same wicked R&D environment as their peers in the pharma industry.

A new medical device takes only around 3-7 years to reach approval in the US.

The regulatory standard is also much lower – most medical devices can be approved on the basis of showing ‘substantial equivalence’ to another device on the market. When evaluating new devices, clinical trials can be considered a ‘nice to have’⁷.

Applying AI to the development of medical devices therefore won’t be as transformative for R&D productivity as it could be in pharma.

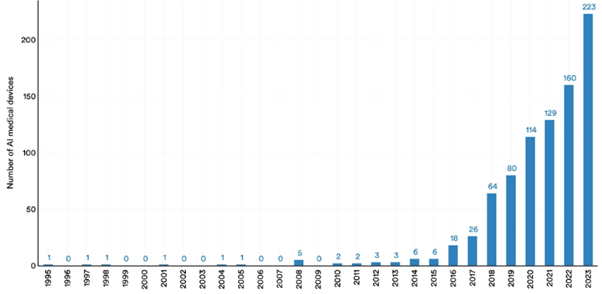

But the lower regulatory burden means we can already see evidence of AI-augmented medical devices entering patient care regimes, and adoption of this technology could still transform patient outcomes.

Figure 3: Number of AI medical devices approved by the FDA, 1995-2023⁸

Siemens Healthineers is already using AI to improve cancer treatment

We are already seeing evidence of this in our portfolio.

Siemens Healthineers is the global leader in imaging technology (like PET and CT scanners) and radiation oncology machines. They are at the forefront of leveraging AI to support early diagnosis, and better treatment, of diseases including cancer.

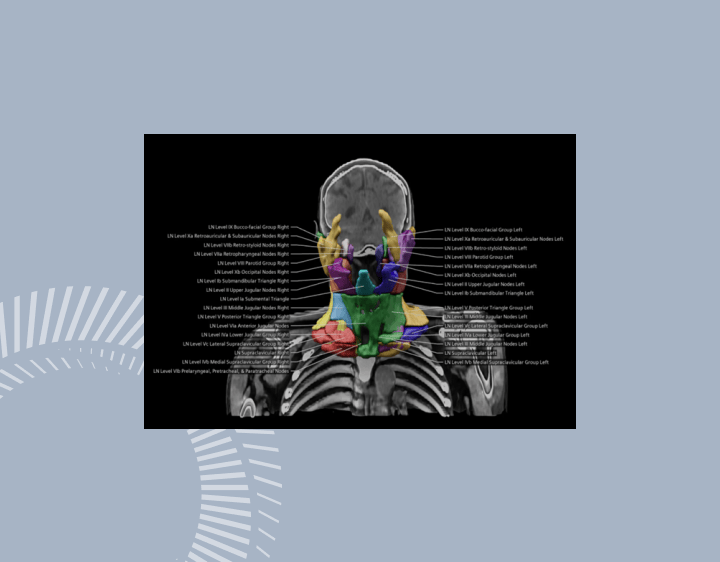

The FDA lists a raft of AI technologies, created by Siemens Healthineers, already approved for patient care⁹. One solution uses AI to provide automatic contouring of ‘organs at risk’ – the healthy organs close to a tumour that need to be protected from radiation. Contouring has historically been a major bottleneck in radiation therapy planning as it is time-consuming for doctors, and errors at this stage are considered a high-risk part of the radiotherapy process¹⁰.

This is just one example of how MedTech companies are using AI to improve patient outcomes. We expect AI to become increasingly prominent in patient care regimes, and an increasingly important competitive differentiator for companies.

The risks posed by AI in health care innovation

While AI has the power to supercharge health care innovation and patient care, this is not without risk.

Several studies have found that AI tools used by doctors may lead to poorer outcomes for women and ethnic minorities. AI tools have been seen to downplay the severity of female patients’ symptoms. Large language models (LLMs) were said to display ‘less empathy’ towards Black and Asian patients.¹¹

This is largely down to the fact that LLMs are trained on data that reflects pre-existing biases.

There is empirical evidence that women and ethnic minorities are consistently underrepresented in clinical trials.¹²

While the problems highlighted by the studies above are harder to measure (downplaying severity, not displaying empathy), it is easy to imagine how gender and racial biases may have crept into AI training data. On gender, Naga Munchetty has compiled a tome of anecdotal evidence supporting the claim that doctors may not take female patients seriously.¹³

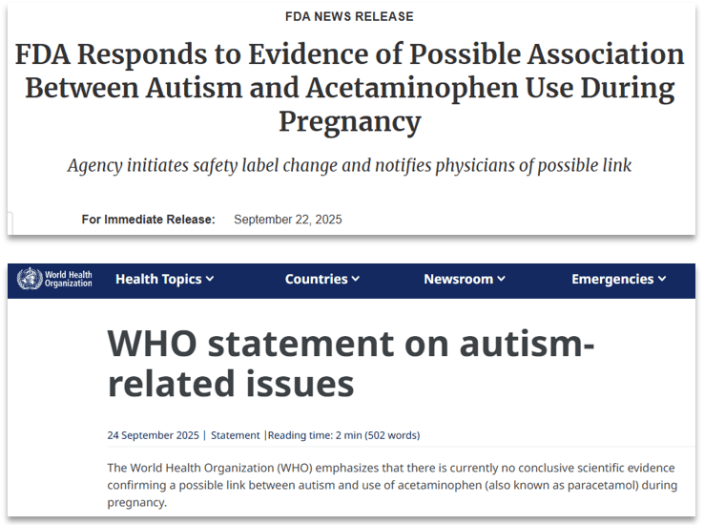

It is the responsibility of health care companies and physicians to be aware of the biases that may exist in these tools – and the responsibility of tech companies to negate them.

Most importantly, it is the responsibility of those in positions of power to safeguard objectivity in health care, and prevent biases and misinformation from entering training data in the first place.¹⁴

1 https://www.artificialgamerfilm.com/

2 A brilliant account of OpenAI’s history can be found in Empire of AI, by Karen Hao, formerly of MIT Technology Review.

3 Stanford AI Index, https://hai.stanford.edu/ai-index/2025-ai-index-report

4 https://www.deloitte.com/us/en/Industries/life-sciences-health-care/articles/measuring-return-from-pharmaceutical-innovation.html

5 https://www.researchgate.net/figure/Erooms-law-the-number-of-new-molecules-approved-by-the-US-Food-and-Drug-Administration_fig4_326479089

6 https://pubs.acs.org/doi/10.1021/acsomega.5c00549

7 https://www.fda.gov/medical-devices/premarket-submissions-selecting-and-preparing-correct-submission/premarket-notification-510k

8 https://hai.stanford.edu/ai-index/2025-ai-index-report

9 https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-enabled-medical-devices

10 https://www.siemens-healthineers.com/en-uk/radiotherapy/software-solutions/autocontouring

11 https://www.ft.com/content/128ee880-acdb-42fb-8bc0-ea9b71ca11a8

12 https://pmc.ncbi.nlm.nih.gov/articles/PMC10264921/ ; https://www.weforum.org/stories/2024/02/racial-bias-equity-future-of-healthcare-clinical-trial/

13 https://www.amazon.co.uk/Its-Probably-Nothing-Critical-Conversations/dp/0008686572

14 https://www.who.int/news/item/24-09-2025-who-statement-on-autism-related-issues ; https://www.fda.gov/news-events/press-announcements/fda-responds-evidence-possible-association-between-autism-and-acetaminophen-use-during-pregnancy